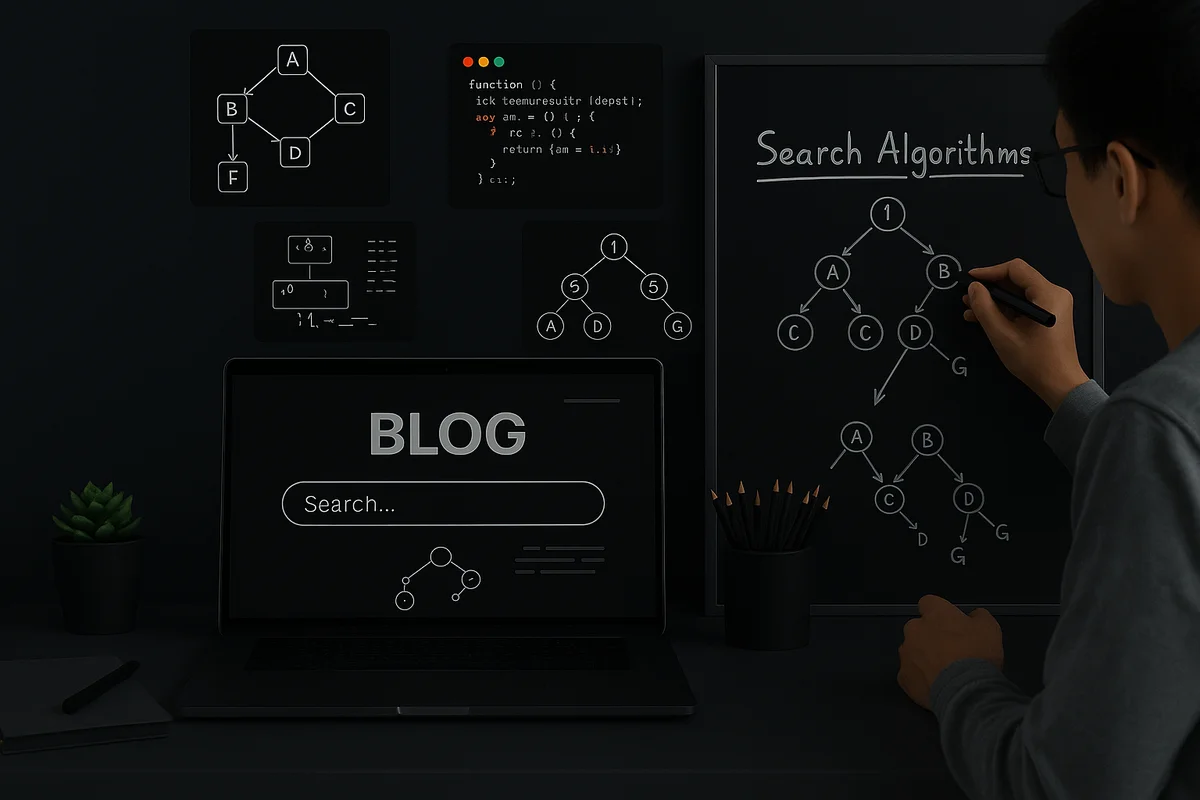

Over-Engineering a Search Feature for My 7-Post Blog: A Developer's Learning Journey

Hybrid search for my Astro blog: a cached in-memory index, a lightweight /api/search.json endpoint, and Fuse.js for fuzzy relevance. Fast, cost-effective, and fully under my control.

Let me start with a confession: I just spent two full weekends building a sophisticated search system for my blog. How many posts does my blog have? Seven. Yes, you read that right: seven posts that you could easily browse through in under two minutes.

Am I crazy? Probably. Do I regret it? Absolutely not.

This is the story of how I deliberately over-engineered a search feature, the technical challenges I faced, and why sometimes building something “unnecessary” is exactly what you need to grow as a developer.

The “Problem” That Wasn’t Really a Problem

Here’s the thing: my blog didn’t actually need search functionality. With seven posts neatly organized by categories and tags, finding content was hardly a challenge. A simple scroll through the homepage would reveal everything I’d ever written.

But that’s not how my developer brain works.

I’m the kind of person who thinks three steps ahead, who sees a small project and immediately starts planning for scale. While looking at my modest blog, I didn’t see seven posts; I saw the potential for seventy, or seven hundred. I started asking myself questions that kept me up at night:

- What happens when I have 50+ posts spread across multiple categories?

- How will readers find that specific tutorial they remember from months ago?

- What if someone wants to search for content within posts, not just titles?

The rational part of my brain knew I was getting ahead of myself. The engineer part of my brain was already sketching out search architectures.

And honestly? I just wanted to learn how to build search functionality properly. Personal projects are the perfect playground for experimenting with technologies you might not get to touch in your day job.

The Great Search Solution Analysis

Option 1: The “Just Google It” Approach

My first thought was simple: let users rely on Google’s site search with site:myblog.com search-term. Zero implementation effort, professional-grade results, and it would scale automatically.

But where’s the fun in that? Plus, it felt like admitting defeat before I’d even tried. I wanted to own the entire user experience, from the search box design to the relevance algorithm.

Option 2: Third-Party Search Services

Services like Algolia or Elasticsearch Cloud crossed my mind. They offer powerful search capabilities with minimal setup, and the free tiers would easily handle my traffic.

The problem? I’m building this as a hobby project, and I have a strict “no recurring costs for fun projects” policy. More importantly, I wanted to understand how search actually works under the hood, not just plug in someone else’s black box.

Option 3: Client-Side Search

I considered building a purely client-side solution: generate a JSON index at build time and use a library like Lunr.js or Fuse.js to search it in the browser.

This felt promising for a static site, but I was concerned about the future. What happens when my “JSON index of everything” becomes several megabytes? Do I really want to ship that to every visitor’s browser?

Option 4: The Custom Server-Side Solution

This is where my over-engineering tendencies kicked in full force. Why not build a custom search API that:

- Uses Astro’s content collections to dynamically read posts

- Implements intelligent caching to avoid rebuilding the search index on every request

- Leverages Fuse.js for fuzzy search capabilities

- Provides a clean JSON API that could theoretically handle thousands of posts

Was this overkill for seven posts? Absolutely. Did it sound like a fun learning challenge? You bet.

Building the Over-Engineered Solution

Weekend One: The API Architecture

I dove into building a search API endpoint at /api/search.json. The core challenge was figuring out how to efficiently serve search results without hammering the file system on every request.
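To make the endpoint idea concrete, here is a rough sketch of what a handler for /api/search.json could look like. It is not the actual code from my blog: the `q` and `page` parameter names, the `SearchParams` type, and the empty `results` array are placeholders for illustration, and it assumes the route is rendered on demand rather than prerendered, so that query parameters are available.

```typescript
// Hypothetical shape of a parsed search request; the names are illustrative.
interface SearchParams {
  query: string;
  page: number;
}

// Pull `q` and `page` out of the request URL, falling back to safe defaults.
function parseSearchParams(url: URL): SearchParams {
  const query = (url.searchParams.get("q") ?? "").trim();
  const rawPage = Number.parseInt(url.searchParams.get("page") ?? "1", 10);
  const page = Number.isFinite(rawPage) && rawPage > 0 ? rawPage : 1;
  return { query, page };
}

// In an Astro route file this function would be exported so Astro can call it
// for GET requests. The search itself is stubbed out with an empty result list.
async function GET({ url }: { url: URL }): Promise<Response> {
  const { query, page } = parseSearchParams(url);
  const body = JSON.stringify({ query, page, results: [] });
  return new Response(body, {
    status: 200,
    headers: { "Content-Type": "application/json" },
  });
}
```

Keeping the parameter parsing in its own small function makes it easy to test without spinning up a server.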

My solution was an in-memory caching system:

```ts
let cache: CacheEntry | null = null;
const CACHE_TTL = 1000 * 60 * 60; // 1 hour

async function getFuse(): Promise<{ fuse: Fuse<IndexedPost>; index: IndexedPost[] }> {
  if (cache && isCacheValid(cache)) {
    return { fuse: cache.fuse, index: cache.indexedPosts };
  }
  // Build new cache...
}
```

The system reads all markdown files once, builds a Fuse.js search index, and keeps everything in memory for subsequent requests. It’s like having a tiny search engine that rebuilds itself when needed.
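The `isCacheValid` check and the rebuild step are not shown above. One way they could work is sketched below, written generically so it runs without Fuse.js. The `TimedEntry` type, the `builtAt` field, and the `createCached` helper are my own illustrative names, not the code from the blog.

```typescript
// Hypothetical cache entry: the cached value plus the time it was built.
interface TimedEntry<T> {
  value: T;
  builtAt: number; // milliseconds since the Unix epoch
}

const CACHE_TTL = 1000 * 60 * 60; // 1 hour, matching the snippet above

// An entry is valid while it is younger than the TTL.
function isCacheValid<T>(entry: TimedEntry<T>, now: number = Date.now()): boolean {
  return now - entry.builtAt < CACHE_TTL;
}

// Wrap an expensive builder so it only reruns when the cache is missing or stale.
// The clock is injectable so the expiry logic can be tested without waiting an hour.
function createCached<T>(build: () => Promise<T>, clock: () => number = Date.now) {
  let cache: TimedEntry<T> | null = null;
  return async function get(): Promise<T> {
    const now = clock();
    if (cache && isCacheValid(cache, now)) return cache.value;
    const value = await build();
    cache = { value, builtAt: now };
    return value;
  };
}
```

One caveat with this simple version: if several requests arrive while the cache is stale, each one may trigger its own rebuild. For a small blog that is probably fine, but caching the in-flight promise instead of the finished value would avoid it.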

The Fuzzy Search Challenge

Here’s where things got interesting. Fuse.js is incredibly powerful, but getting the configuration right took more trial and error than I’d like to admit.

I spent hours tweaking these parameters:

```ts
const fuseOptions = {
  includeScore: true,
  keys: [
    { name: 'title', weight: 0.6 },
    { name: 'description', weight: 0.3 },
    { name: 'category', weight: 0.25 },
    { name: 'tags', weight: 0.25 },
    { name: 'content', weight: 0.1 },
  ],
  threshold: 0.4,
  ignoreLocation: true,
};
```

The threshold value was particularly tricky. In Fuse.js, a threshold of 0.0 requires an exact match and 1.0 matches almost anything. Too low (0.1) and you miss obvious matches with minor typos. Too high (0.8) and you get irrelevant results. I settled on 0.4 after testing with various search queries.
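A side note on the key weights in those options: they add up to 1.5, not 1. Assuming only the relative size of each weight matters (my understanding is that Fuse.js normalizes them, but treat that as an assumption rather than a documented guarantee), it helps to convert them to shares of the total to see how much each field really counts. The `weightShares` helper below is just illustrative arithmetic, not part of Fuse.js.

```typescript
// Key weights copied from the options above.
const keyWeights: Record<string, number> = {
  title: 0.6,
  description: 0.3,
  category: 0.25,
  tags: 0.25,
  content: 0.1,
};

// Convert raw weights to fractions of the total so they are easier to compare.
function weightShares(weights: Record<string, number>): Record<string, number> {
  const total = Object.values(weights).reduce((sum, w) => sum + w, 0);
  const shares: Record<string, number> = {};
  for (const [key, w] of Object.entries(weights)) {
    shares[key] = w / total;
  }
  return shares;
}

const shares = weightShares(keyWeights);
// The title carries 40% of the total weight, the post body only about 7%.
```

Seen this way, a title match counts roughly six times as much as a match buried in the content, which is what I wanted.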

Weekend Two: The User Interface

The backend was working, but I wanted the frontend to feel polished. I built a search interface with:

- Instant search with debounced queries (because nobody likes laggy search)

- Advanced filtering by categories and tags (future-proofing for when I have more content)

- Keyboard shortcuts like Ctrl+K to focus the search box (because power users deserve love)

- Smart pagination with ellipsis for large result sets (optimistic, I know)

- Loading states and error handling for a smooth experience
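The "pagination with ellipsis" item from the list above is the one with the most fiddly logic, so here is a small sketch of one way to compute the page list. The `paginate` function and its `windowSize` parameter are hypothetical names for illustration, not my actual component code.

```typescript
type PageItem = number | "...";

// Build a compact page list: the first page, the last page, and a small
// window around the current page, with "..." wherever pages are skipped.
function paginate(current: number, total: number, windowSize = 1): PageItem[] {
  const items: PageItem[] = [];
  let previous = 0; // last page number pushed so far
  for (let page = 1; page <= total; page++) {
    const isEdge = page === 1 || page === total;
    const isNearCurrent = Math.abs(page - current) <= windowSize;
    if (!isEdge && !isNearCurrent) continue;
    // If any pages were skipped since the last shown page, mark the gap.
    if (page - previous > 1) items.push("...");
    items.push(page);
    previous = page;
  }
  return items;
}
```

For page 5 of 10 this yields `1 ... 4 5 6 ... 10`. This simple version also shows an ellipsis when only a single page is hidden; a more polished one might show that page number instead.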

The trickiest part was getting the debouncing right. Too short a delay (100ms) and fast typers would still trigger multiple requests. Too long (1000ms) and the search felt sluggish. I settled on 300ms: responsive enough for most users while preventing request spam.
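For anyone who hasn't written one before, a debounce helper is only a few lines. This is a generic sketch rather than my exact implementation; `runSearch` and `queriesSent` are stand-ins for whatever actually calls /api/search.json.

```typescript
// Return a wrapper that delays calling `fn` until `delayMs` has passed
// without another call. Each new call cancels the previously scheduled one.
function debounce<Args extends unknown[]>(
  fn: (...args: Args) => void,
  delayMs: number,
): (...args: Args) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: Args) => {
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args);
    }, delayMs);
  };
}

// Hypothetical usage: record which queries would have been sent to the API.
const queriesSent: string[] = [];
const runSearch = (query: string): void => {
  queriesSent.push(query);
};
const debouncedSearch = debounce(runSearch, 300);

// Three quick keystrokes schedule only one search, for the final value.
debouncedSearch("a");
debouncedSearch("as");
debouncedSearch("astro");
```

Nothing is sent while the user is still typing; the single request goes out 300ms after the last keystroke.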

Why I Don’t Regret Over-Engineering This

Looking back, was building a sophisticated search system for seven blog posts objectively ridiculous? Yes.

Was it worth it? Absolutely.

Here’s what I gained from this “unnecessary” project:

Technical Skills: I now understand fuzzy search algorithms, caching strategies, and the nuances of building responsive search interfaces. These are transferable skills I can apply to any future project.

Problem-Solving Experience: Debugging a score calculation issue taught me to question my assumptions and dig deeper into library documentation. The pagination logic helped me think through edge cases and user experience flows.

Future-Proof Foundation: When (if?) my blog grows to 100+ posts, I won’t need to rebuild the search system from scratch. The architecture can handle the scale.

Portfolio Piece: This project demonstrates full-stack thinking, attention to user experience, and the ability to build polished features. It’s the kind of project that shows I can think beyond immediate requirements.

Pure Learning Joy: Sometimes you build things just because they’re interesting. This project scratched that itch perfectly.

The Technical Lessons Learned

Caching Is Everything

The difference between reading files on every request vs. caching in memory is dramatic. My search API responds in under 50ms because everything lives in RAM after the initial build.

User Experience Details Matter

Features like keyboard shortcuts, loading states, and URL integration don’t take much extra effort but make the experience feel professional. These details separate hobby projects from production-quality software.

Over-Engineering Can Be Good Practice

Building robust solutions for simple problems teaches you to think systematically about architecture, error handling, and scalability. It’s mental training for when you face genuinely complex challenges.

Documentation and Testing Save Time

I spent more time debugging configuration issues than I would have if I’d read the Fuse.js documentation more carefully upfront. Lesson learned.

What’s Next?

Could I add Redis for even better caching? Sure, but that feels like crossing the line from “educational over-engineering” to “pointless complexity.” The in-memory cache works perfectly for my use case.

The more interesting question is what other “unnecessary” features I might build next. Real-time analytics? A recommendation engine? A comment system with sentiment analysis?

The beauty of personal projects is that you get to define what’s worth building.

Final Thoughts: Embrace the Over-Engineering

If you’re a developer reading this and thinking “that’s completely overkill for seven posts,” you’re absolutely right. But you’re also missing the point.

Personal projects aren’t always about solving pressing problems; they’re about learning, experimenting, and pushing your skills forward. Sometimes the best way to understand a technology is to use it in a context where you don’t strictly need it.

The search system I built will serve me well if my blog grows, but more importantly, it taught me things I couldn’t have learned any other way. The next time I need to implement search functionality in a professional context, I’ll have the experience and confidence to make informed decisions.

So go ahead: over-engineer that side project. Build the authentication system for your todo app that only you will ever use. Implement microservices for your personal portfolio site. Add machine learning to your grocery list app.

Your future self will thank you for it.
